The Path to High Performing SQL Queries

Some tips to speed up your queries

This post is about how to optimize SQL queries with regard to yielding high performance gains. In doing so, three fundamental rules will be depicted which should generally be considered when it comes to sophisticated queries where a huge amount of data is involved. In addition to this, I will also present some methods and principles to optimize a query’s performance.

First of all it has to be said that performance in SQL queries is depending on many different aspects such as the underlying database management system (DBMS), the database’s architecture or the IT infrastructre you are working in.

Firt of all I would like to point out that the rules and optimizations measures presented are either based on experience in my professional environment or backed up by indicated literature. Furthermore, it has to be said that other DBMS might work with other data handling principles and methods. Hence, the guidelines below may do not have any influence or even lead to less performing queries. The system environment, as well as the hardware, must also be regarded as influencing factors which may temper the effect of the advice below.

Personally, I made great experiences applying these rules and optimization techniques which is why I would like to share it.

Rules

Shortly after some first tries with SQL one will realize that there exist many different ways to reach the desirable result. However, one should still be aware of some basic rules when high performance is required. Having said that the rules explained in this section cannot be regarded as a silver bullet for all queries, but one will generally not be worse off applying them.

Filtering Smalles Multitude First

Imagine a database containing two tables, one called tbl_People including names, gender and addresses of all people from the world and the other one, which is related [1:n] called tbl_Disease including all possible diseases existing. The aim now would be to show all men which live in the United States and are diagnosed with lung cancer, which in this case would be a very rare disease. Now, when constructing the query, an optimized way would be to firstly filter by the smallest multitude, in this case undoubtedly the illness, before joining the entities. This leads to a substantial reduction of records in the very first pass through. Secondly, it would be recommendable to further minimize the list so that only masculine individuals are left. Finally, one could filter for the country. The statement \eqref{eq:f1} expresses this using relational algebra notation.

In contrast to the statement \eqref{eq:f1}, notice the suboptimal version \eqref{eq:f2} below, where the two entities are joint at first and then filtered in a not ideal order:

Thrifty Column Presentation

It is all too easy to code a query with SELECT*, which then will project all columns available, regardless if they are needed or not. But as is often the case in programming, operating in an optimal way means working with only the minimum of recourses need for a certain task. Imagine a table with 150 columns which all get invoked in a query despite only three columns are actually needed. This requires far more processing power which simultaneously takes CPU power away from other tasks. Hence, only put columns in SQL statements which either are necessary for further calculation or shall be outputted for informational reasons. This will keep data processing light and affects the performance of the query in a positive way

Avoid Adhoc Queries

Adhoc is Latin and means for this purpose. Especially in MS Access, for instance, it is sometimes easier to write the SQL statement directly in the executed code than to create a predefined query and invoke it as a database access object (DAO). The reason why this is not advisable is because of the so-called execution plan which the DB will devise for each query after the first execution. Simplified expressed, it is a set of instructions which indicate how to best execute the query

Optimization

Besides rules about how to construct a query the most efficient way, performance is often also a question of the database’s architecture. Since renovation a DB is a arduous undertaking, I would like to introduce four techniques to increase query performance that do not require dangerous architectural database manipulations.

Temporary Tables

The desirable result of data selection often cannot be made within one single query. When being faced with sophisticated queries which additionally shall be displayed in the system and react to the user’s input immediately and in real time, the usage of temporary tables is highly advisable. It may be a little bit costlier to develop but it can yield impressive gains

Instead of processing an intricate SQL statement which may involve many different tables with a big relation chain over and over again, the results can initially be written in a temporary table which then will be shown to the user. After he concluded all modifications, the data can be compared and eventually written back in the appropriate tables. Besides better performance, this approach provides the advantage of being able to draw on a temporary data state when it comes to debugging.

Another use case which will be suited to temporary tables occurs when a table should be joined to a larger table, where a condition must be considered, one can gain a performance improvement by pulling out the subset of data needed from the large table into a temp table and joining with that instead

A guiding principle regarding the application of temporary tables is the characteristic that no productive data should be affected while operating with this method. Consequently, the content of these type of tables should always be considered as erasable at any time due to its intermediate purpose.

Indexes

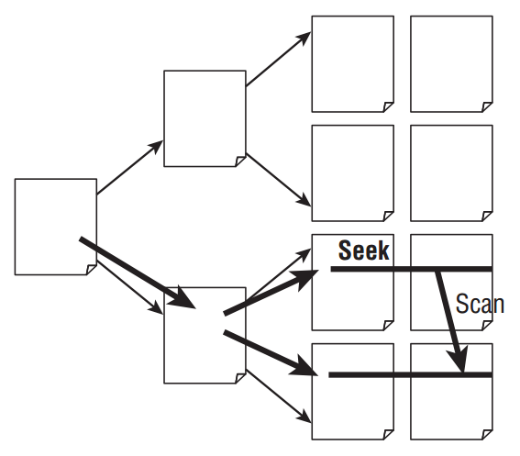

Imagine a movie collector is looking for a specific film in his personal collection. Unfortunately, he did not sort the items regarding any order which forces him to look through all DVDs he owns. This issue would not have occurred when he initially would have sorted his collection alphabetically or, even better, numbered each item and list it together with its storage location on in a book. Although this would obviously cost effort to create such a register as well as maintaining it, such a tool would surely make searching for a DVD a lot easier and faster. This analogy explains quite well the principle of indexes on tables. Applying them on important columns in a table, a so-called shadow table will be initialized in the background which then helps the database to find specific records much faster. To support the understanding of this tool better, please note the following illustration

Instead of going through all the rows in a table to find a specific record, the database is able to cut the amount of data piecemeal down. Eventually, only a small extent of all tuples must be browsed to return the searched value. In general, it is recommendable to always initiate indexes on columns which often get sorted or filtered by, especially when the column contains text instead of numbers. Furthermore, they should also be deployed on columns which are responsible for establishing the relationship between entities, in particular, Foreign Keys.

Redundancy

Data should always be stored fully normalized when constructing a database. In this section, however, I will outpoint an exception when it is more useful to actively elude the principle of storing each information only once. Doing this, we operate with so-called redundant data.

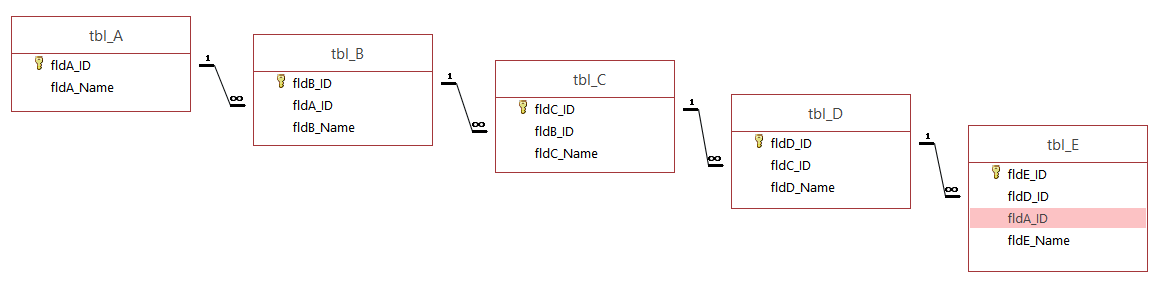

It sometimes can be very helpful to store a Primary Key in form of a copy in a table which is linked to the original table through a long relation chain as it can occur in deep hierarchies, for instance, illustrated in the figure below.

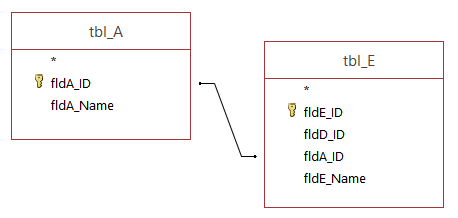

When the appropriate A-name of an E-object shall be queried, the database has to go through the entire relation chain to return the correct value. But listing the A-ID in tbl_E as a copy, provides the possibility to shorten this query procedure significantly as indicated below.

Nonetheless, it has to be said that this approach is not recommendable in data hierarchies with high alternation rate since each change has to be updated manually in the copy-field. However, in other Database Management Systems like MS SQL Server, such update transactions can be done automatically by so-called triggers

Subqueries

When a high data cascading is present, it often leads to a higher executing performance when the query is engineered with subqueries, in other words, predefined queries which then get joined to the next query. Another possibility would be to insert one SQL statement directly in the next SQL statement and save this as one query. In doing so, one has constructed a so-called nested select, which can decrease the performance of the data processing. Hence, it is generally the better approach to define only one statement per query and save them individually. Depending on the database technology you use, it would then even be possible to exactly allocate each single subquery to different server’s processors. This allows a non-sequential execution of the subqueries which means the whole process gets completed after the slowest query succeeded

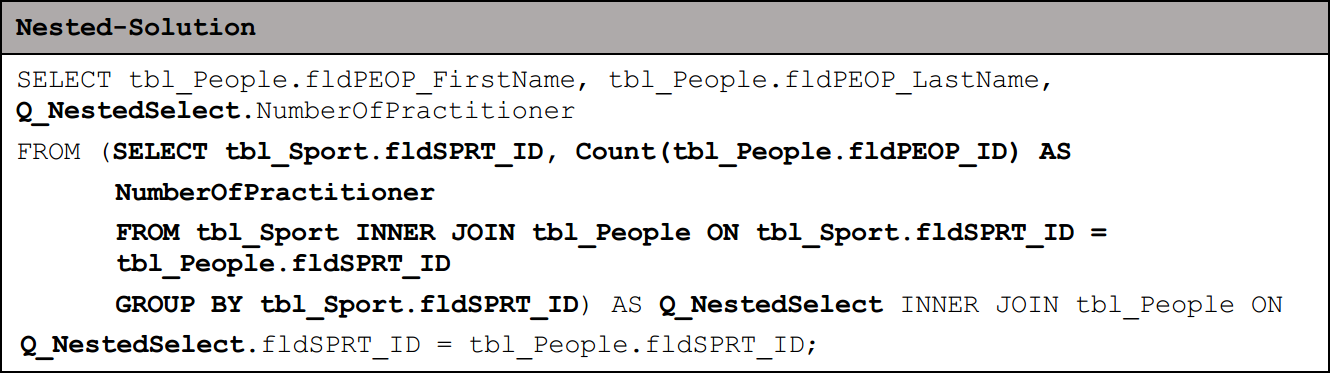

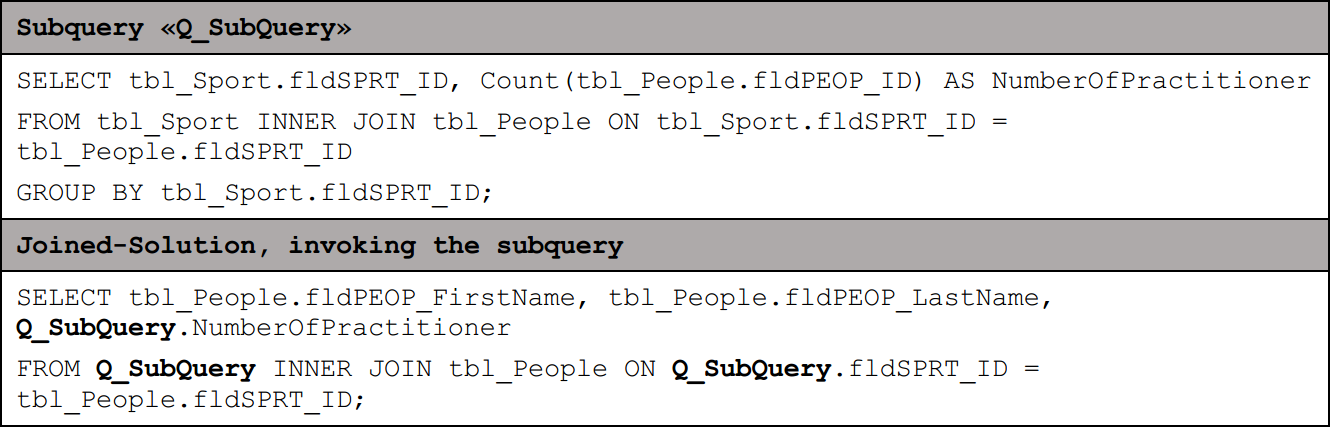

For instance, if the aim would be to return a list with all people’s first and family names including the number of other persons who are practicing the same sport as themselves. As this evaluation requires a subquery which will depict the appropriate number of participators each sport, two approaches arise regarding solve this issue.

When applying the nested select approach, the SQL statement would look like the following:

The bold section highlights the subquery which is in this case constructed in a nested way.

To visualize the more efficient way of solving the task, please consider the alternative solution below, in which the subquery gets invoked by a second query:

Conclusion

At a first glance, query performance might not seem a necessary issue to cope with but once a certain data volume is transcended, this topic will become more and more important. This article provides a small repertoire of possible optimization solutions which eventually can be adopted to its individual use case. Nonetheless, it has to be said that I only touched the surface of database science. Considering all the numerous different database management systems somehow operate differently than others, it would hardly be possible to write down a master solution for high performing SQL queries. Anyways, you now know about some basic tools to tune your SQL statments in order to decrease waiting times.